Australia has a real opportunity to learn from the UK social investment market. We have an opportunity to learn from and replicate things that went well. We also have a precious opportunity to correct some of the things that didn’t go so well, rather than repeat the mistakes.

The Alternative Commission on Social Investment was established to take stock, investigate what’s wrong with the UK social investment market and to make some practical suggestions for how the market can be made relevant and useful to a wider range of charities, social enterprises and citizens working to bring about positive social change. It released its report on March 27 2015. Download the report and its summary of recommendations at the bottom of this webpage http://socinvalternativecommission.org.uk/home/.

This is an important report because it is a reflection on the UK Social Investment market by social enterprises, using information gathered from social enterprises, investors and research. It was funded by a grant from the Esmee Fairbairn Foundation, the CEO of which is Caroline Mason CBE, former Chief Operating Officer of Big Society Capital (she remains on their advisory board).

The Commission is a response to growing recognition in the UK that the social investment market is not meeting the needs of the organisations and individuals it seeks to serve. “There is a real feeling that the social investment community isn’t listening to the people on the front line” – Jonathan Jenkins, Chief Executive, Social Investment Business.

The five key underlying questions the Commission addressed are:

- What do social sector organisations want?

- Can social investment, as currently conceived, meet that need?

- What’s social about social investment?

- Who are social investors and what do they want?

- What can we do to make social investment better?

Particularly relevant lessons for Australia from the Alternative Commission on Social Investment’s report are:

1. Minimise the hype: e.g. “Best available estimates are that the domestic market could reach A$32 billion in a decade (IMPACT-Australia 2013)”. This is not a forecast, but the most optimistic of goals. We can track the progress we do make, rather than set ourselves up for failure and disappointment. We can likewise cease talk of social investment filling the gap left by funding cuts unless there is evidence that this has occurred.

2. Increase investor transparency: information on investments made will help organisations seeking finance navigate investors more efficiently. It will also help coordinate efforts and highlight gaps between investors.

3. Don’t just replicate the mainstream finance market for social investment: some mainstream finance models don’t transpose well to social investment – we can develop a social finance market that is fit-for-purpose and takes advantage of modern technology. There are lots of left-field suggestions in the report.

4. Understand the market we seek to serve: we can avoid some of the ‘us and them’ mentality that has arisen in the UK by seeking and listening to the voice of the investee in order to develop social investment that is useful to them.

5. Fill the gaps: similar to the UK, there is a funding gap for small, high-risk, unsecured investments. If this is the type of investment we are going to talk about all the time, let’s provide it.

6. Redefine social investors: social investors don’t have to be just rich people and financial institutions. In order to achieve the three points above, we should encourage and highlight social investments by social purpose organisations, individuals who are not ‘wholesale’ or ‘sophisticated’ investors and superannuation funds. Individual investment currently occurs in Australia through cooperatives, mutuals and small private investment. Crowd-sourced equity funding reform is being considered by the Australian Treasury and may reduce the regulatory burden and cost associated with making investments available to individuals who aren’t already rich.

This paper enables us to learn from the mistakes of the UK market. It should also encourage us to embrace our own failures and learn as we go. We can build an Australian social investment market that better meets the needs of social purpose organisations and the communities they serve.

There are actually lots of great ideas in the paper not elaborated on here – heaps of ideas for organisations and initiatives that could translate well to Australia.

If you would like to read my analysis of how each of the 50 recommendations applies to the Australian context, please download this PDF table of recommendations. Or see each numbered recommendation below with my comments in colour.

Priority recommendations for Australia

2. Explain if and how social value is accounted for within your investments – do you expect investees to demonstrate their impact as a condition of investment? Do you offer lower interest rates based on expected impact? Are you prepared to take bigger risks based on expected impact? (Big Society Capital, SIFIs)

- For organisations specialising in social investment this should be doable and helpful for their potential investees. A range of interest rates across investments and information about why some are higher than others could also be very helpful.

23. Minimise all forms of social investment hype that might inflate expectations and under no circumstances imply that social investment can fill gaps left by cuts in public spending (Cabinet Office, DWP, MoJ, HMT ministers and officials, Big Society Capital, Big Lottery Fund, NCVO, ACEVO, Social Enterprise UK)

- The repetition of statements like “Best available estimates are that the domestic market could reach A$32 billion in a decade (IMPACT-Australia 2013)” should be discouraged. Cuts in public spending may be a driver for social investment, but there is no basis for the promise that their effects will be mitigated by social investment.

24. Avoid treating the development of the social investment market as an end in itself – social investment is a relatively small phenomenon overlapping with but not the same as ‘access to finance for social sector organisations’ and ‘increasing flows of capital to socially useful investment’. These wider goals should be prioritised over a drive to grow the social investment market for its own sake (Cabinet Office, Big Society Capital)

- All those who are involved in ‘building the market’ should clarify their efforts in terms of their contribution to these wider goals. Many already do. The second goal may need some rewording.

30. Provide opportunities and support for citizens to invest in socially motivated pensions (HM Treasury)

- There exists some opportunity for citizens to invest in socially motivated superannuation, but this could be broadened across more superannuation funds.

32. Social investors should better reflect and understand the market they are seeking to serve by getting out and about, meeting a broader range of organisations – particularly organisations based outside London – recruiting from the sector and cutting costs that deliver no social value – (SIFIs)

- Social investment organisations in Australia may have less of a problem with this than in the UK, but certainly there is little evidence of the financing needs of potential investees in social investment policies and strategies so far

36. Don’t replicate expensive models from mainstream finance, do explore how to use social models and technology to keep costs down (Big Society Capital, SIFIs)

- There is opportunity to develop a social finance market that is fit-for-purpose, rather than simply transposed from conventional finance

46. Ignore hype about the social investment market – (Umbrella bodies, SSOs)

- The hype is still so pure in Australia that many social sector organisations are repeating it, with very few doubting its validity yet

50. Understand that just being socially owned may not be enough – you don’t have to care about impact frameworks but need to recognise that an investor will want to know how you are managing your success at what you claim to do (SSOs)

- Some definition of success and measure of progress may be required; it’s worth being aware of this and preparing when approaching investors

34. Focus on additionality and filling the gaps esp small, patient risky, equity-like – (Big Society Capital, Key Stakeholders, SIFIs)

- Even in our nascent market, these gaps are emerging.

42. ‘Crowd in’ people who aren’t rich – support models of social investment that enable investments from people with moderate incomes and assets, and remove barriers that prevent smaller investors from accessing tax breaks such as SITR (HMT, Cabinet Office, Big Society Capital, SIFIs)

- This currently occurs largely via cooperative models. Other models rely on private investment, regulatory change (currently being considered) or issuing a full prospectus. Tax breaks are not currently available but may become so.

44. Back yourselves and invest in each other – Social sector organisations should consider cutting out the middleman and developing models where they can invest in each other, where legal and appropriate – (SSOs)

- There are examples of this occurring, but potential for more. Australian social purpose organisations are wealthy by UK standards and there is greater potential for this here.

Directly applicable to Australia

1. Publish information on all social investments across all investors – with investees anonymised if required (Big Society Capital, SIFIs, the Social Investment Forum)

- All self-identifying social investors (institutions, foundations and individuals) could be encouraged to do this. It could also be useful to collate the information somewhere as a database. Consideration would need to be given to possible negative consequences. This has begun to a certain degree, e.g. the SEDIF progress report 2013 (http://docs.employment.gov.au/node/32639) and the WA Social Enterprise Fund Grants Program (http://www.communities.wa.gov.au/grants/grants/social-enterprise-fund-grants-program/Pages/default.aspx). The suggestions from delegates at roundtables are useful. They called for upfront transparency from social investors on:

- what they will and won’t fund

- where the money goes

- the terms of investment

- how to present a case for investment

- what the application process will involve.

6. Be clear about terminology – what specifically do you mean by, for example, ‘social investment’, ‘impact investment’, ‘finance for charities and social enterprise’ – and consistent across government departments (Cabinet Office, Big Society Capital, SIFIs, Big Lottery Fund, Umbrella Bodies)

- Many people who use these terms currently define them, but it may still be useful to encourage this practice as the Australian market develops

7. Clarify how much is in dormant bank accounts – look at other unclaimed assets, insurance, Oyster cards, Premium Bonds, and other products. (Cabinet Office, Big Society Capital)

- Australian governments could research unclaimed public funds that could be put to good use

9. Publish details of investments made on your website – to enable Social Sector Organisations to understand the size and type of investments you make (SIFIs)

- Organisations, families and individuals that make social investments could be encouraged to do this

10. Be transparent about costs – be clear about what fees you charge and why (Big Society Capital, SIFIs)

- Some social investors do this already but it could be encouraged generally as good practice

25. Consider the ‘wider universe’ of socially impactful investment including additional research on the £3.7 billion investment in SSOs primarily from mainstream banks (Umbrella bodies, Researchers, Big Society Capital, Mainstream Banks)

- Australia has very little research on socially impactful investment at all, so this is a good consideration as we develop new research projects.

26. Consider how SSOs can be better supported to access mainstream finance through guarantees and other subsidies, and through information and awareness-raising (HMTreasury, Cabinet Office, Big Lottery Fund)

- Unsure who might take this on, but good to consider for organisations that want to support social sector organisations.

28. Promote greater focus on socially motivated investment in HMT, BoE, and FCA and BIS – (Politicians, Cabinet Office)

- Key institutions for the mainstream investment market should be involved in or at least invited to social investment initiatives.

31. Work together in equal partnership with the social sector to develop a set of principles for what makes an investment ‘social’ – (Cabinet Office, Big Society Capital, Big Lottery Fund, SIFIs, Umbrella bodies, the Social Investment Forum, SSOs)

- This could be one that Impact Investing Australia could lead.

37. Explore alternative due diligence models including developing common approaches to due diligence for different types of social investment – (Social Investment Forum, SIFIs, Big Society Capital)

- Social investment organisations may have some learning to share – unsure.

39. Support the development of a distinctively social secondary market for social investments where early stage investors will be able to sell on investments to investors with similar social commitment but less appetite for risk (Cabinet Office, Big Society Capital, Access)

- This does not exist in Australia (although there are instances where it has occurred) – could be a good idea to build/test with our tiny market in anticipation of growth. Not obvious who would take this forward.

45. Large asset-rich social sector organisations should consider supporting smaller organisations to take on property either by buying it for them or helping them to secure it by providing a guarantee facility where legal and appropriate (SSOs)

- This may already occur – seems logical

49. Identify what is ‘social’ about the investment approach that you are hoping for from investors: are you expecting cheaper money, higher risk appetite, more flexibility, more ‘patient’ capital, wrap around business support? (SSOs)

- This should help both investees and investors define each deal and subsequently, the market

Possibly applicable to Australia

11. Be clear about what is ‘social’ about your approach to investment – what is it that you are doing that a mainstream finance provider would not do – and why is it useful? Mandatory statement of fact sheet. Report on overheads. (Big Society Capital, SIFIs)

- It is not obvious who would make this mandatory or why. But the question is interesting and a standardised ‘fact sheet’ could help potential investees navigate the investor market.

22. More funders should consider their possible role in social investment wholesaling including British Business Bank, Esmee Fairbairn, Unltd, Nesta, Wellcome Trust (Funders)

- There may be potential for some wholesaling, perhaps by superannuation funds or other large funds with a social investment remit

33. Employ more social entrepreneurs and others with social sector experience – take on more staff with direct, practical experience of using repayable finance to do social good and enable them to use that experience to inform investment decisions (Big Society Capital, SIFIs)

- This may be something to keep in mind as social investment organisations employ more people and as the number of people with repayable finance experience grows, but it might be a bit early and may not be a problem here

38. Support the development of Alternative Social Impact Bonds options include: (a) models which enable investors from the local community to invest relatively small amounts of money with lower expected returns making them less expensive in the long-term to the public purse, more attractive and replicable; (b) a waterfall approach that sees X% of performance above a certain level reinvested in the enterprise the community (Cabinet Office, Big Society Capital, SIFIs, SSOs)

- The paper doesn’t expand on these suggestions. (a) is relatively clear and in Australia relies on either regulatory change or small private investments (b) is not expanded on in the report and is thus unclear – may already be happening under a couple of different guises

43. Create a ‘Compare the market’/’trip advisor’ tool for social investment – enabling organisations to rate their experiences and comment – (Umbrella bodies and SSOs)

- While nice in theory, there may not be enough social investors and deals made for this to be meaningful. Could be a good thing to start and build over time

47. Go mainstream – if looking for investment, consider banks and other investors and not just specifically social investment (SSOs)

- The bulk of financing for social purpose organisations in Australia comes from mainstream banks and investors

48. Before seeking investment, work out whether you are looking for repayable investment or whether you are looking for a grant – (SSOs)

- There is no clear evidence that this solves a problem we have in Australia

Unsure of applicability

8. Publish asset management strategies – including details of how endowments are invested in a socially and environmentally responsible manner. (Big Society Capital, SIFIs)

- Unsure what the effect would be in Australia

27. Apply an added value test before supporting funds and programmes designed to develop ‘the social investment market’, be clear about the likely social outcomes that social investment offers that could not be better delivered another way (Cabinet Office, Big Lottery Fund)

- Very difficult to be clear that social outcomes could not be better delivered in another way and would not like to discourage simultaneous initiatives

29. Consider providing guarantees for social investment via crowdfunding platforms based on clear position on what ‘social investment’ means in this context (Cabinet Office, Access)

- At the moment Australians cannot invest in Australian organisations via crowdfunding platforms

35. Consider the risk of the social investment market failing to make a significant number of demonstrably social investments at all alongside the risk of some of those investments being unsuccessful (Big Society Capital, SIFIs)

- This is very broad and how it might be implemented is unclear

Not applicable

3. Explain who Big Society Capital (BSC)-backed market is for – Be clear about how many social sector organisations can realistically expect to receive investment from the BSC-backed market (assuming it works). If it’s 200, be honest about that (Politicians, Cabinet Office, Big Society Capital)

- No Big Society Capital equivalent

4. Explain what Big Society Capital (BSC)-backed market is for – Be clear on policy positions on crowding in/crowding out – is the point of BSC to bring mainstream investors in or grow the social investment market to crowd them out? (Cabinet Office, Big Society Capital)

- No Big Society Capital equivalent

5. Explain the relationship between Big Society Capital and the Merlin banks – What is the banks’ role (if any) in governance and strategy? Under what circumstances would they receive dividends? (Cabinet Office, Big Society Capital)

- No Big Society Capital equivalent

12. Reconsider the role of Big Society Capital – prioritise building a sustainable and distinctively social investment market over ‘crowding in’ institutional finance into a new market doing – (Big Society Capital, Cabinet Office)

- No Big Society Capital equivalent

13. Consider splitting the investment of Unclaimed Assets and Merlin bank funds. Unclaimed Assets, allocated by law to Social Sector Organisations, could be invested on terms that better meet demand than currently, while Merlin bank funds could be invested in a wider group of organisations, with a focus on positive social value – (Big Society Capital, Cabinet Office)

- No Big Society Capital equivalent

14. Consider demarcating the unclaimed assets spending as ‘social investment’ and the Merlin funds as ‘impact investment’ – (Cabinet Office, Big Society Capital)

- No Big Society Capital equivalent

15. Particularly consider investing some Merlin funds in CDFIs & credit unions that provide finance for individuals and mainstream businesses in response to social need (Big Society Capital)

- No Big Society Capital equivalent

16. Bear more transactions costs – particularly those costs which are imposed on SIFIs through demands for extensive legal processes (Big Society Capital)

- No Big Society Capital equivalent

17. In the event that it becomes profitable, before paying out dividends to shareholders Big Society Capital should allocate 50% of profits into a pot of funding to reduce transaction costs for SIFIs enabling them to reduce the cost of finance for SSOs (Big Society Capital)

- No Big Society Capital equivalent

18. Be more flexible in supporting SSOs to engage with public sector outsourcing and be supported by policymakers to do so learning lessons from the experience of the MoJ Transforming Rehabilitation fund (Big Society Capital)

- No Big Society Capital equivalent

19. Consider democratising Big Society Capital board – Or at least be more open and clear about who has controlling stakes and vetoes within its structure. Consider how to make both board and staff team more representative of the sectors that they serve (Big Society Capital, Cabinet Office)

- No Big Society Capital equivalent

20. Change the name ‘Big Society Capital’ to something less politically charged – (Big Society Capital, Policymakers)

- No Big Society Capital equivalent

21. Consider whether all remaining funds in dormant bank accounts need to be invested in Big Society Capital or whether remaining funds could be used in other ways – for example, creating local or regional social investment funds controlled by local people (Cabinet Office)

- No Big Society Capital equivalent

40. Consider the practicality of establishing a simple registration and regulation system for organisations eligible for social investment – as supported by unclaimed assets – with unambiguous criteria for registration of organisations who consider themselves to be ‘social’ but do not use a recognised social corporate structure – (Cabinet Office)

- It is not obvious what this registration would enable in Australia as our market currently exists

41. Listen to the people – find out what (if anything) citizens in general think about social investment (Cabinet Office, Big Society Capital, SIFIs)

- Australian citizens in general may not think about social investment; more than likely they’ve never heard of it. It is not obvious who would collect this information and what they would do with it

Response

Civil Society Finance, 27 March 2015, Social investment problems include ‘too much hype and hubris and not enough transparency’ says report

Third Sector, 27 March 2015, Growing the social investment market requires more investor transparency, report says

Social Investment Business, 27 March 2015, Jonathan Jenkins Responds to the final Report from The Alternative Commission on Social Investment

There have been eight social impact bond (SIB) contracts signed so far in Australia (I use this

There have been eight social impact bond (SIB) contracts signed so far in Australia (I use this

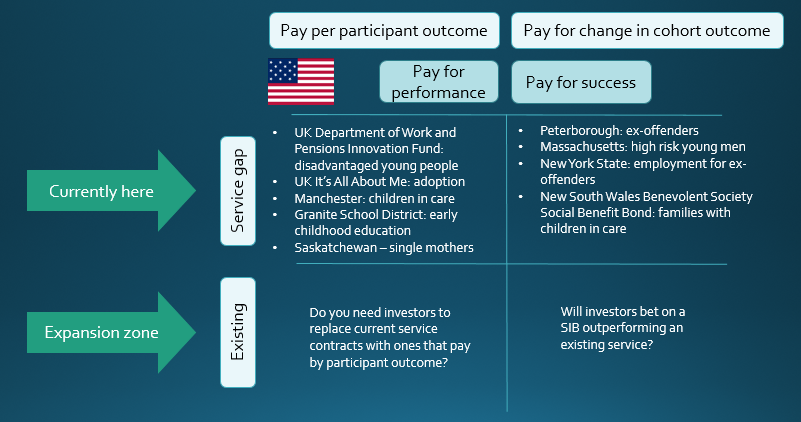

So could a pay-per-participant-outcome SIB replace an existing contract?

So could a pay-per-participant-outcome SIB replace an existing contract?

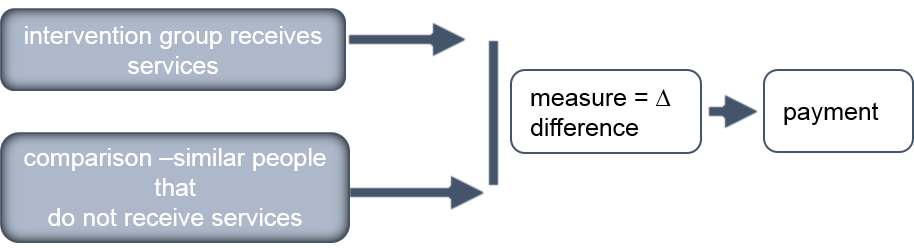

he past few weeks as to whether Rikers Island was a success or failure and what that means for the SIB ‘market’. You can read the Huffington Post learning and analyses from

he past few weeks as to whether Rikers Island was a success or failure and what that means for the SIB ‘market’. You can read the Huffington Post learning and analyses from

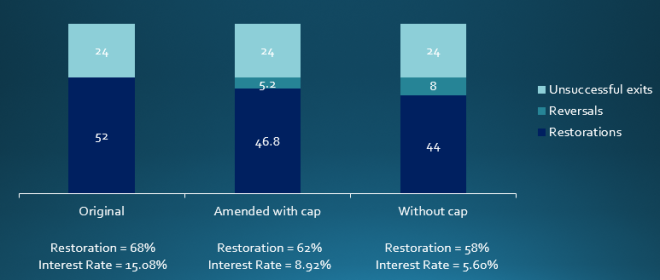

One of my key lessons from my Newpin practice colleagues was the importance of their relationships and conversations with government child protection (FACS) staff when determining which families were ready for Newpin and had a genuine probability (much lower than 100%) of restoration. When random allocations were first flagged I thought ‘this will bugger stuff up’.

One of my key lessons from my Newpin practice colleagues was the importance of their relationships and conversations with government child protection (FACS) staff when determining which families were ready for Newpin and had a genuine probability (much lower than 100%) of restoration. When random allocations were first flagged I thought ‘this will bugger stuff up’. n program to ‘get’ a SIB and that we were thinking ethically through all aspects of the program. We were a team and all members knew where they did and didn’t have expertise.

n program to ‘get’ a SIB and that we were thinking ethically through all aspects of the program. We were a team and all members knew where they did and didn’t have expertise. Australia has a real opportunity to learn from the UK social investment market. We have an opportunity to learn from and replicate things that went well. We also have a precious opportunity to correct some of the things that didn’t go so well, rather than repeat the mistakes.

Australia has a real opportunity to learn from the UK social investment market. We have an opportunity to learn from and replicate things that went well. We also have a precious opportunity to correct some of the things that didn’t go so well, rather than repeat the mistakes.